Is There A Fake Youtube About Young Rape Victi! With Hillary

YouTube's recommendation system draws on techniques in machine learning to decide which videos are auto-played or appear "up next". The precise formula it uses, however, is kept secret. Aggregate data revealing which YouTube videos are heavily promoted by the algorithm, or how many views individual videos receive from "up next" suggestions, is also withheld from the public.

Disclosing that data would enable academic institutions, fact-checkers and regulators (as well as journalists) to assess the type of content YouTube is most likely to promote. By keeping the algorithm and its results under wraps, YouTube ensures that any patterns that indicate unintended biases or distortions associated with its algorithm are concealed from public view.

By putting a wall around its data, YouTube, which is owned by Google, protects itself from scrutiny. The computer program written by Guillaume Chaslot overcomes that obstacle to force some degree of transparency.

The ex-Google engineer said his method of extracting data from the video-sharing site could not provide a comprehensive or perfectly representative sample of videos that were being recommended. But it can give a snapshot. He has used his software to detect YouTube recommendations across a range of topics and publishes the results on his website, algotransparency.org.

How Chaslot's software works

The program simulates the behaviour of a YouTube user. During the election, it acted as a YouTube user might have if she were interested in either of the two main presidential candidates. It discovered a video through a YouTube search, and then followed a chain of YouTube-recommended titles appearing "up next".

Chaslot programmed his software to obtain the initial videos through YouTube searches for either "Trump" or "Clinton", alternating between the two to ensure they were each searched 50% of the time. It then clicked on several search results (usually the top five videos) and captured which videos YouTube was recommending "up next".

The procedure was then repeated, this time by selecting a sample of those videos YouTube had just placed "up next", and identifying which videos the algorithm was, in turn, showcasing beside those. The process was repeated thousands of times, collating more and more layers of information about the videos YouTube was promoting in its conveyor belt of recommended videos.

By design, the program operated without a viewing history, ensuring it was capturing generic YouTube recommendations rather than those personalised to individual users.

The data was probably influenced by the topics that happened to be trending on YouTube on the dates he chose to run the program: 22 August; 18 and 26 October; 29-31 October; and 1-7 November.

On most of those dates, the software was programmed to begin with five videos obtained through search, capture the first five recommended videos, and repeat the process five times. But on a handful of dates, Chaslot tweaked his program, starting off with three or four search videos, capturing three or four layers of recommended videos, and repeating the process up to six times in a row.
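The crawl described above can be sketched roughly as follows. This is an illustrative outline only, not Chaslot's published code: the `search` and `up_next` callables are hypothetical stand-ins for whatever fetches search results and "up next" lists, and the sketch follows every captured recommendation rather than a sample of them.

```python
from typing import Callable, List, Set, Tuple


def crawl_recommendations(
    search: Callable[[str], List[str]],   # hypothetical: query -> ordered video ids
    up_next: Callable[[str], List[str]],  # hypothetical: video id -> "up next" ids
    query: str,
    n_search: int = 5,       # search results to start from
    n_recommended: int = 5,  # "up next" videos captured per video
    depth: int = 5,          # how many times the process is repeated
) -> Set[Tuple[str, str]]:
    """Return the (source_video, recommended_video) pairs that were observed."""
    edges: Set[Tuple[str, str]] = set()
    visited: Set[str] = set()
    frontier = search(query)[:n_search]
    for _ in range(depth):
        next_frontier: List[str] = []
        for video in frontier:
            if video in visited:
                continue  # do not re-expand a video already followed
            visited.add(video)
            for rec in up_next(video)[:n_recommended]:
                edges.add((video, rec))
                next_frontier.append(rec)
        frontier = next_frontier
    return edges
```

Running the function once with the query "Trump" and once with "Clinton" would mirror the alternating searches; passing `n_search=3` or `depth=6` corresponds to the variations used on some dates.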

Whichever combinations of searches, recommendations and repeats Chaslot used, the program was doing the same thing: detecting videos that YouTube was placing "up next" as enticing thumbnails on the right-hand side of the video player.

His program also detected variations in the degree to which YouTube appeared to be pushing content. Some videos, for example, appeared "up next" beside just a handful of other videos. Others appeared "up next" beside hundreds of different videos across multiple dates.

In total, Chaslot's database recorded 8,052 videos recommended by YouTube. He has made the code behind his program publicly available here. The Guardian has published the full list of videos in Chaslot's database here.

Content analysis

The Guardian's research included a broad study of all 8,052 videos as well as a more focused content analysis, which assessed 1,000 of the top recommended videos in the database. The subset was identified by ranking the videos, first by the number of dates they were recommended, and then by the number of times they were detected appearing "up next" beside another video.
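The two-level ranking described above amounts to a sort on a pair of keys. A minimal sketch, assuming each video has already been reduced to two counts (the `VideoStats` record and its field names are invented for illustration, not taken from the database):

```python
from typing import List, NamedTuple


class VideoStats(NamedTuple):
    title: str
    dates_recommended: int  # number of distinct dates the video was recommended
    times_recommended: int  # times it was detected "up next" beside another video


def rank_videos(videos: List[VideoStats]) -> List[VideoStats]:
    """Rank by number of dates first, then by number of recommendations."""
    return sorted(
        videos,
        key=lambda v: (v.dates_recommended, v.times_recommended),
        reverse=True,
    )


def top_n(videos: List[VideoStats], n: int) -> List[VideoStats]:
    """Take the n highest-ranked videos, e.g. n=500 per search term."""
    return rank_videos(videos)[:n]
```

Under this ordering, a video recommended on more dates always outranks one recommended on fewer dates, however many times the latter appeared; the second count only breaks ties.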

We assessed the top 500 videos that were recommended after a search for the term "Trump" and the top 500 videos recommended after a "Clinton" search. Each individual video was scrutinised to determine whether it was obviously partisan and, if so, whether the video favoured the Republican or Democratic presidential campaign. In order to judge this, we watched the content of the videos and considered their titles.

About a third of the videos were deemed to be either unrelated to the election, politically neutral or insufficiently biased to warrant being categorised as favouring either campaign. (An example of a video that was unrelated to the election was one entitled "10 Intimate Scenes Actors Were Embarrassed to Film"; an example of a video deemed politically neutral or even-handed was this NBC News broadcast of the second presidential debate.)

Many mainstream news clips, including ones from MSNBC, Fox and CNN, were judged to fall into the "even-handed" category, as were many mainstream comedy clips created by the likes of Saturday Night Live, John Oliver and Stephen Colbert.

Formulating a view on these videos was a subjective process but for the most part it was very obvious which candidate videos benefited. There were a few exceptions. For example, some might consider this CNN clip, in which a Trump supporter forcefully defended his lewd remarks and strongly criticised Hillary Clinton and her husband, to be beneficial to the Republican. Others might point to the CNN anchor's exasperated response, and argue the video was actually more helpful to Clinton. In the end, this video was too difficult for us to categorise. It is an example of a video listed as not benefiting either candidate.

For two-thirds of the videos, however, the process of judging who the content benefited was relatively uncomplicated. Many videos clearly leaned toward one candidate or the other. For example, a video of a speech in which Michelle Obama was highly critical of Trump's treatment of women was deemed to have leaned in favour of Clinton. A video falsely claiming Clinton suffered a mental breakdown was categorised as benefiting the Trump campaign.

We found that most of the videos labeled as benefiting the Trump campaign might be more accurately described as highly critical of Clinton. Many are what might be described as anti-Clinton conspiracy videos or "fake news". The database appeared highly skewed toward content critical of the Democratic nominee. But for the purpose of categorisation, these types of videos, such as a video entitled "WHOA! HILLARY THINKS CAMERA'S OFF… SENDS SHOCK MESSAGE TO TRUMP", were listed as favouring the Trump campaign.

Missing videos and bias

Roughly half of the YouTube-recommended videos in the database have been taken offline or made private since the election, either because they were removed by whoever uploaded them or because they were taken down by YouTube. That might be because of a copyright violation, or because the video contained some other breach of the company's policies.

We were unable to watch original copies of missing videos. They were therefore excluded from our first round of content analysis, which included only videos we could watch, and concluded that 84% of partisan videos were beneficial to Trump, while just 16% were beneficial to Clinton.

Interestingly, the bias was marginally larger when YouTube recommendations were detected following an initial search for "Clinton" videos. Those resulted in 88% of partisan "up next" videos being beneficial to Trump. When Chaslot's program detected recommended videos after a "Trump" search, in contrast, 81% of partisan videos were favorable to Trump.

That said, the "up next" videos following from "Clinton" and "Trump" videos often turned out to be the same or very similar titles. The type of content recommended was, in both cases, overwhelmingly beneficial to Trump, with a surprising amount of conspiratorial content and fake news damaging to Clinton.

Supplementary count

After counting only those videos we could watch, we conducted a second analysis to include those missing videos whose titles strongly indicated the content would have been beneficial to one of the campaigns. It was also often possible to find duplicates of these videos.

Two highly recommended videos in the database with one-sided titles were, for instance, entitled "This Video Will Get Donald Trump Elected" and "Must Watch!! Hillary Clinton tried to ban this video". Both of these were categorised, in the second round, as beneficial to the Trump campaign.

When all 1,000 videos were tallied – including the missing videos with very slanted titles – we counted 643 videos that had an obvious bias. Of those, 551 videos (86%) favoured the Republican nominee, while only 92 videos (14%) were beneficial to Clinton.

Whether missing videos were included in our tally or not, the conclusion was the same. Partisan videos recommended by YouTube in the database were about six times more likely to favour Trump's presidential campaign than Clinton's.
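The percentages and the "about six times" figure follow directly from the supplementary tally of 643 partisan videos. A short check of the arithmetic (the helper name `share_percent` is ours, introduced only for this illustration):

```python
def share_percent(count: int, total: int) -> int:
    """Share of the total as a whole-number percentage."""
    return round(100 * count / total)


partisan_total = 643  # videos with an obvious bias, from the supplementary count
pro_trump = 551
pro_clinton = 92

# The two categories should account for every partisan video.
assert pro_trump + pro_clinton == partisan_total

trump_pct = share_percent(pro_trump, partisan_total)      # 551 / 643
clinton_pct = share_percent(pro_clinton, partisan_total)  # 92 / 643
ratio = pro_trump / pro_clinton                           # pro-Trump per pro-Clinton video

print(f"Trump: {trump_pct}%, Clinton: {clinton_pct}%, ratio: {ratio:.2f}")
```

The ratio works out to just under six pro-Trump videos for every pro-Clinton video in this tally.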

Database analysis

All 8,052 videos were ranked by the number of "recommendations" – that is, the number of times they were detected appearing as "up next" thumbnails beside other videos. For example, if a video was detected appearing "up next" beside four other videos, that would be counted as four "recommendations". If a video appeared "up next" beside the same video on, say, three separate dates, that would be counted as three "recommendations". (Multiple recommendations between the same videos on the same day were not counted.)
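One way to express this counting rule is to treat every detection as a (date, source video, recommended video) triple and discard exact repeats before counting. The sketch below assumes that representation; the tuple layout and function name are illustrative, not the format of Chaslot's database.

```python
from collections import Counter
from typing import Iterable, Tuple

# (date, source_video, recommended_video); layout assumed for illustration
Observation = Tuple[str, str, str]


def count_recommendations(observations: Iterable[Observation]) -> Counter:
    """Count "recommendations" per video.

    Repeats of the same source/recommended pair on the same date collapse
    into one, so each pair contributes at most once per day.
    """
    unique = set(observations)
    return Counter(recommended for _date, _source, recommended in unique)
```

With this rule, a video seen beside four different videos on one day scores four, and a video seen beside the same video on three separate dates scores three.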

Here are the 25 most recommended videos, according to the above metric.

Chaslot's database also contained information about the YouTube channels used to broadcast videos. (This information was only partial, because it was not possible to identify channels behind missing videos.) Here are the top 10 channels, ranked in order of the number of "recommendations" Chaslot's program detected.

Campaign speeches

We searched the entire database to identify videos of full campaign speeches by Trump and Clinton, their spouses and other political figures. This was done through searches for the terms "speech" and "rally" in video titles followed by a check, where possible, of the content. Here is a list of the videos of campaign speeches found in the database.

Graphika analysis

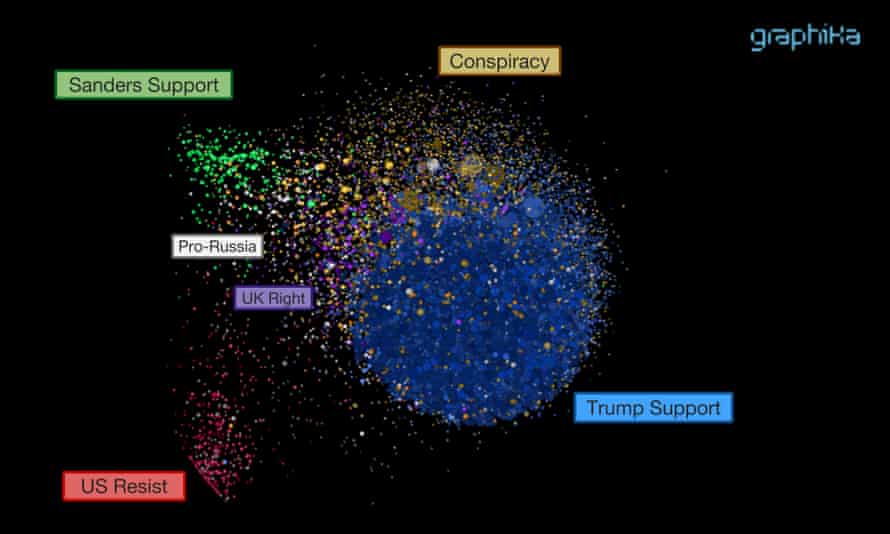

The Guardian shared the entire database with Graphika, a commercial analytics firm that has tracked political disinformation campaigns. The company merged the database of YouTube-recommended videos with its own dataset of Twitter networks that were active during the 2016 election.

The company discovered more than 513,000 Twitter accounts had tweeted links to at least one of the YouTube-recommended videos in the six months leading up to the election. More than 36,000 accounts tweeted at least one of the videos 10 or more times. The most active 19 of these Twitter accounts cited videos more than 1,000 times – evidence of automated activity.

"Over the months leading up to the election, these videos were clearly boosted by a vigorous, sustained social media campaign involving thousands of accounts controlled by political operatives, including a large number of bots," said John Kelly, Graphika's executive director. "The most numerous and best-connected of these were Twitter accounts supporting President Trump's campaign, but a very active minority included accounts focused on conspiracy theories, support for WikiLeaks, and official Russian outlets and alleged disinformation sources."

Kelly then looked specifically at which Twitter networks were pushing videos that we had categorised as beneficial to Trump or Clinton. "Pro-Trump videos were pushed by a huge network of pro-Trump accounts, assisted by a smaller network of dedicated pro-Bernie and progressive accounts. Connecting these two groups and also pushing the pro-Trump content were a mix of conspiracy-oriented, 'Truther', and pro-Russia accounts," Kelly concluded. "Pro-Clinton videos were pushed by a much smaller network of accounts that now identify as a 'resist' movement. Far more of the links promoting Trump content were repeat citations by the same accounts, which is characteristic of automated amplification."

Finally, we shared with Graphika a subset of a dozen videos that were both highly recommended by YouTube, according to the above metrics, and particularly egregious examples of fake or divisive anti-Clinton video content. Kelly said he found "an unmistakable pattern of coordinated social media amplification" with this subset of videos.

The tweets promoting them almost always began after midnight the day of the video's appearance on YouTube, typically between 1am and 4am EDT, an odd time of the night for US citizens to be first noticing videos. The sustained tweeting continued "at a more or less even rate" for days or weeks until election day, Kelly said, when it suddenly stopped. That would indicate "clear evidence of coordinated manipulation", Kelly added.

YouTube statement

YouTube provided the following response to this research:

"We have a great deal of respect for the Guardian as a news outlet and institution. We strongly disagree, however, with the methodology, data and, most importantly, the conclusions made in their research," a YouTube spokesperson said. "The sample of 8,000 videos they evaluated does not paint an accurate picture of what videos were recommended on YouTube over a year ago in the run-up to the US presidential election."

"Our search and recommendation systems reflect what people search for, the number of videos available, and the videos people choose to watch on YouTube," the spokesperson continued. "That's not a bias towards any particular candidate; that is a reflection of viewer interest." The spokesperson added: "Our only conclusion is that the Guardian is attempting to shoehorn research, data, and their incorrect conclusions into a common narrative about the role of technology in last year's election. The reality of how our systems work, however, simply doesn't support that premise."

Last week, it emerged that the Senate intelligence committee wrote to Google demanding to know what the company was doing to prevent a "malign incursion" of YouTube's recommendation algorithm – which the top-ranking Democrat on the committee had warned was "particularly susceptible to foreign influence". The following day, YouTube asked to update its statement.

"Throughout 2017 our teams worked to improve how YouTube handles queries and recommendations related to news. We made algorithmic changes to better surface clearly-labeled authoritative news sources in search results, especially around breaking news events," the statement said. "We created a 'Breaking News' shelf on the YouTube homepage that serves up content from reliable news sources. When people enter news-related search queries, we prominently display a 'Top News' shelf in their search results with relevant YouTube content from authoritative news sources."

It continued: "We also take a tough stance on videos that do not clearly violate our policies but contain inflammatory religious or supremacist content. These videos are placed behind a warning interstitial, are not monetized, recommended or eligible for comments or user endorsements."

"We appreciate the Guardian's work to shine a spotlight on this challenging issue," YouTube added. "We know there is more to do here and we're looking forward to making more announcements in the months ahead."

- Read the full story: how YouTube's algorithm distorts truth

The above research was conducted by Erin McCormick, a Berkeley-based investigative reporter and former San Francisco Chronicle database editor, and Paul Lewis, the Guardian's west coast bureau chief and former Washington correspondent.

Source: https://www.theguardian.com/technology/2018/feb/02/youtube-algorithm-election-clinton-trump-guillaume-chaslot

Posted by: gouldsump1974.blogspot.com
